Web 2.0 signals a major change in the software market: we are moving to a platform where users can create and disseminate content using powerful, desktop-replacement applications on the web. Rich User Experiences is a design pattern that Web 2.0 exploits to deliver desktop-like applications, powered by technologies such as JavaScript, XML, AJAX, SOAP and REST. These web apps provide the same features as their desktop counterparts, with the added advantage of being connected and available anywhere there is an Internet connection. Multiple, lengthy installations are a thing of the past, and data is liberated so it can be shared with friends and colleagues.

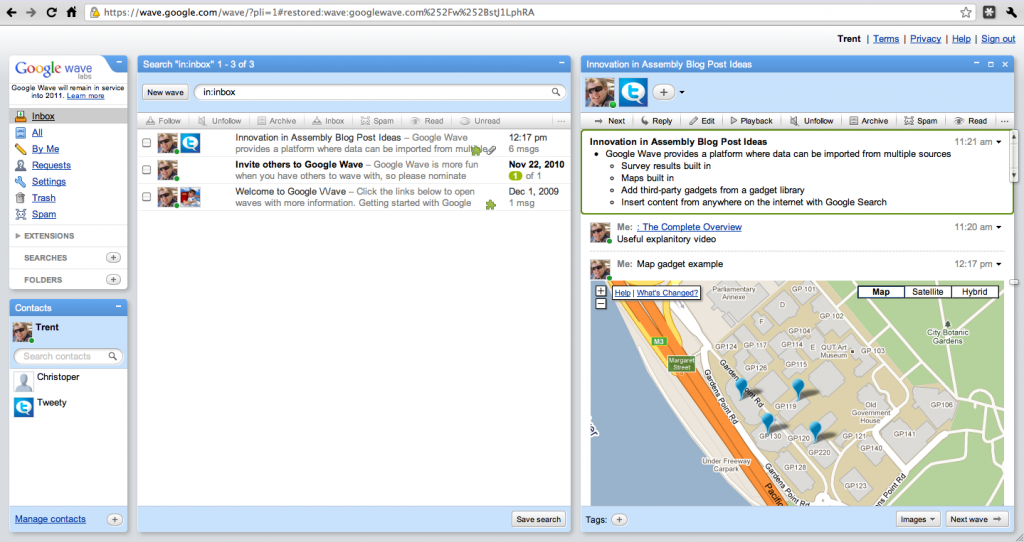

There are many examples of Web 2.0 applications that use engaging interaction to provide a rich experience: sites like Microsoft Photosynth, advanced YouTube features such as Leanback and the Queue, and tailored experiences for mobile versions of apps (like Google Search with its Instant Preview on mobile). Each of these products has a specific goal and offers a tailored solution to a specific consumer need, while also making use of strategies for a rich user experience.

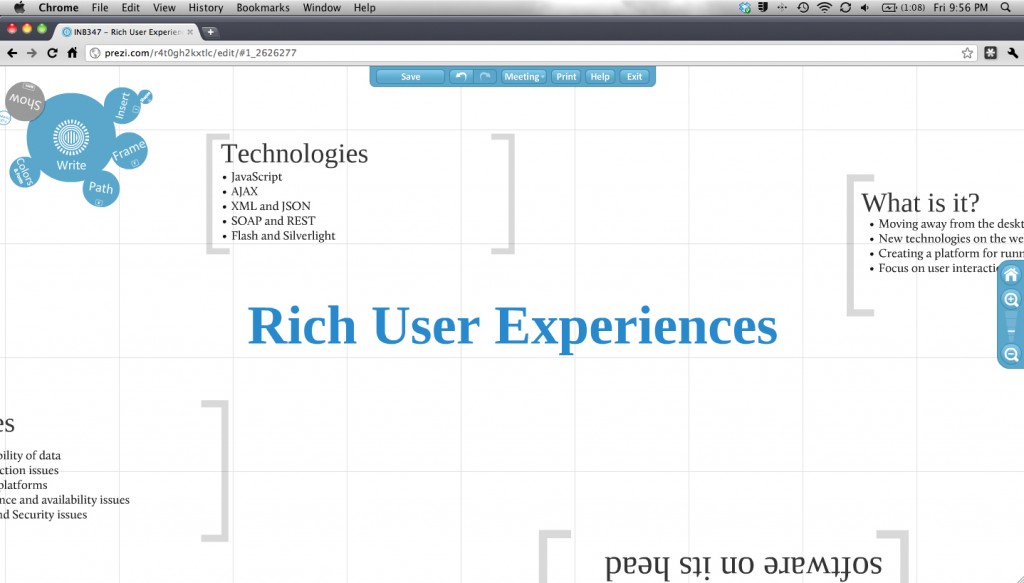

One unique application in the domain of rich user experience online software is Prezi. Prezi is, as you may guess, a presentation program. This may make it sound like Microsoft PowerPoint, but it is nothing like the presentation programs we are used to on the desktop. Prezi is a zooming presenter: there is no concept of pages or slides. Everything sits on one large canvas that can have text, headings, images and video embedded, with “paths” (the navigation structure) placed over the top to control the presentation flow. A lot of effort has clearly gone into tailoring the presentation development process to match the goals of the system and the capabilities of the web.
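To make the canvas-and-path idea concrete, here is a minimal sketch in Python of how a zooming presenter could be modelled. This is purely illustrative and is not Prezi's actual data model or API: the `Element` and `Canvas` classes, their fields, and the default screen size are all assumptions of mine. Elements live at positions on a single canvas, the path is just an ordered list of element names, and each step of the presentation becomes a camera position plus a zoom factor chosen so that element fills the screen.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """One item on the canvas (hypothetical model, not Prezi's API)."""
    name: str
    kind: str      # e.g. "text", "heading", "image" or "video"
    x: float       # centre of the element, in canvas units
    y: float
    width: float
    height: float


@dataclass
class Canvas:
    """A single large canvas plus an ordered path over its elements."""
    elements: dict = field(default_factory=dict)
    path: list = field(default_factory=list)  # element names, in order

    def add(self, element):
        self.elements[element.name] = element

    def add_step(self, name):
        # A path step can only point at something already on the canvas.
        if name not in self.elements:
            raise KeyError(f"no element named {name!r} on the canvas")
        self.path.append(name)

    def views(self, screen_w=1024.0, screen_h=768.0):
        """Return (name, centre_x, centre_y, zoom) for each path step.

        The zoom is the largest factor at which the whole element
        still fits inside the (assumed) screen size.
        """
        result = []
        for name in self.path:
            e = self.elements[name]
            zoom = min(screen_w / e.width, screen_h / e.height)
            result.append((name, e.x, e.y, zoom))
        return result


canvas = Canvas()
canvas.add(Element("title", "heading", 0.0, 0.0, 512.0, 384.0))
canvas.add(Element("photo", "image", 300.0, 200.0, 128.0, 64.0))
canvas.add_step("title")
canvas.add_step("photo")

for step in canvas.views():
    print(step)
```

Notice there are no slides anywhere in this model. Reordering the presentation means reordering the path, and the content on the canvas never moves.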

Prezi is a unique example of taking an existing desktop application and adapting it to suit the patterns employed by Web 2.0. The Prezi system is available across platforms (any desktop or notebook via any modern web browser, and also Apple’s iPad) and includes similar tools to a desktop presentation app, minus some more advanced features such as transitions and build-ins. At first glance it might seem that Prezi just hasn’t bothered implementing these features because they are unimportant or technically impossible. On the contrary, Prezi is simply focusing on the core components required to get innovative and exciting presentation software onto the web. This is an important factor, as the simplicity in design creates a focus on a compelling workflow. This differentiation is critical to focus the user on Prezi’s core competency: engaging and highly visual presentations. The reduced, condensed tool set also simplifies the process of using it, so users can learn quickly. All controls are highly visual and designed to be natural to use. The only confusing part of Prezi is letting go of preconceptions of what a presentation is, which means users need to consider whether they can work with such a radically different presentation paradigm.

Looking at what Prezi doesn’t do so well, the main issue is deep personalisation. While tools are easy to get to, there is no “shortcut” system that makes the most frequently used tools easier to access. There is also no setting to automatically remember a user’s preferred security/Prezi visibility setting. These issues are easily fixed and aren’t critical, but addressing them would add to the overall experience. Overall though, Prezi integrates the best practice for the Rich User Experiences Web 2.0 pattern.

As part of my personal commitment to practicing what I preach, I created a basic Prezi about this week’s content. It is embedded below.

Prezi will give me a new way to communicate ideas with an audience. How could you use Prezi?

References

Ray, B. (2011). Google squeezes thumbnails into mobile search. Retrieved April 1, 2011 from http://www.theregister.co.uk/2011/03/10/google_preview/

Stewart, A. (2007). User Experience, Rich Internet Applications and the Future of Software. Retrieved April 1, 2011 from http://www.zdnet.com/blog/stewart/user-experience-rich-internet-applications-and-the-future-of-software/256